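I never feel entirely comfortable keeping data only with a cloud provider. Providers routinely advertise data durability of more than nine nines, but that figure certainly does not cover the provider's own operational mistakes. So it is still worth keeping a local backup of object storage data.

Here I built a local object store with MinIO and use the rclone tool to back up to it once a day.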

Original link: http://blog.ryjer.com/posts/a11b2263c4.html

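1. Purpose

Back up the object storage bucket on Tencent Cloud regularly, so that an incident on Tencent's side cannot cause serious data loss. (Mainly to guard against "accidents" that Tencent Cloud causes on purpose.)

Protect the bucket dedicated to the blog.

2. Approaches

a. Use Rclone's sync subcommand together with the local crond to run it on a schedule, syncing from the cloud bucket to a local MinIO bucket.

b. Use the mirror subcommand of the MinIO project's mcli client together with crond to mirror on a schedule. It does not appear to be incremental, though.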

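3. Rclone implementation

3.1 References

Rclone website

Alibaba Cloud OSS configuration (rclone.org)

Local filesystem (rclone.org)

3.2 Installing Rclone

Rclone local installation documentation

Run the following command to install the stable release: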

curl https://rclone.org/install.sh | sudo bash

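The installation output is shown below for reference; it may differ between rclone versions. The site is hosted outside China, so the download is slow.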

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 15 100 15 0 0 1 0 0:00:15 0:00:07 0:00:08 3

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 13.8M 100 13.8M 0 0 98k 0 0:02:24 0:02:24 --:--:-- 182k

Archive: rclone-current-linux-amd64.zip

creating: tmp_unzip_dir_for_rclone/rclone-v1.55.1-linux-amd64/

inflating: tmp_unzip_dir_for_rclone/rclone-v1.55.1-linux-amd64/README.txt [text]

inflating: tmp_unzip_dir_for_rclone/rclone-v1.55.1-linux-amd64/rclone.1 [text]

inflating: tmp_unzip_dir_for_rclone/rclone-v1.55.1-linux-amd64/README.html [text]

inflating: tmp_unzip_dir_for_rclone/rclone-v1.55.1-linux-amd64/git-log.txt [text]

inflating: tmp_unzip_dir_for_rclone/rclone-v1.55.1-linux-amd64/rclone [binary]

Purging old database entries in /usr/share/man...

mandb: warning: /usr/share/man/man8/fsck.fat.8.manpage-fix.gz: ignoring bogus filename

Processing manual pages under /usr/share/man...

Updating index cache for path `/usr/share/man/man1'. Wait...mandb: warning: /usr/share/man/man1/config.guess.1.gz: whatis parse for config.guess(1) failed

mandb: warning: /usr/share/man/man1/config.sub.1.gz: whatis parse for config.sub(1) failed

Updating index cache for path `/usr/share/man/man3'. Wait...mandb: warning: /usr/share/man/man3/function::MKDEF.3stap.gz: whatis parse for function::MKDEF(3stap) failed

mandb: warning: /usr/share/man/man3/TAP::Harness::Beyond.3pm.gz: whatis parse for TAP::Harness::Beyond(3pm) failed

mandb: warning: /usr/share/man/man3/function::-.3stap.gz: whatis parse for function::-(3stap) failed

mandb: warning: /usr/share/man/man3/function::sa_handler.3stap.gz: whatis parse for function::sa_handler(3stap) failed

mandb: warning: /usr/share/man/man3/probe::sunrpc.svc.authorise.3stap.gz: whatis parse for probe::sunrpc.svc.authorise(3stap) failed

Updating index cache for path `/usr/share/man/man8'. Wait...mandb: warning: /usr/share/man/man8/fsck.fat.8.manpage-fix.gz: ignoring bogus filename

done.

Checking for stray cats under /usr/share/man...

Checking for stray cats under /var/cache/man...

Purging old database entries in /usr/share/man/cs...

Processing manual pages under /usr/share/man/cs...

Purging old database entries in /usr/share/man/da...

Processing manual pages under /usr/share/man/da...

Purging old database entries in /usr/share/man/de...

Processing manual pages under /usr/share/man/de...

Purging old database entries in /usr/share/man/fr...

Processing manual pages under /usr/share/man/fr...

Purging old database entries in /usr/share/man/hu...

Processing manual pages under /usr/share/man/hu...

Purging old database entries in /usr/share/man/id...

Processing manual pages under /usr/share/man/id...

Purging old database entries in /usr/share/man/it...

Processing manual pages under /usr/share/man/it...

Purging old database entries in /usr/share/man/ja...

Processing manual pages under /usr/share/man/ja...

Purging old database entries in /usr/share/man/ko...

Processing manual pages under /usr/share/man/ko...

Purging old database entries in /usr/share/man/pl...

Processing manual pages under /usr/share/man/pl...

Purging old database entries in /usr/share/man/pt_BR...

Processing manual pages under /usr/share/man/pt_BR...

Purging old database entries in /usr/share/man/ru...

Processing manual pages under /usr/share/man/ru...

Purging old database entries in /usr/share/man/sv...

Processing manual pages under /usr/share/man/sv...

Purging old database entries in /usr/share/man/tr...

Processing manual pages under /usr/share/man/tr...

Purging old database entries in /usr/share/man/zh_CN...

Processing manual pages under /usr/share/man/zh_CN...

Purging old database entries in /usr/share/man/zh_TW...

Processing manual pages under /usr/share/man/zh_TW...

Purging old database entries in /usr/share/man/sk...

Processing manual pages under /usr/share/man/sk...

Purging old database entries in /usr/share/man/es...

Processing manual pages under /usr/share/man/es...

Purging old database entries in /usr/share/man/nl...

Processing manual pages under /usr/share/man/nl...

Purging old database entries in /usr/share/man/pt...

Processing manual pages under /usr/share/man/pt...

Purging old database entries in /usr/local/share/man...

Processing manual pages under /usr/local/share/man...

Updating index cache for path `/usr/local/share/man/man1'. Wait...done.

Checking for stray cats under /usr/local/share/man...

Checking for stray cats under /var/cache/man/local...

5 man subdirectories contained newer manual pages.

553 manual pages were added.

0 stray cats were added.

0 old database entries were purged.

rclone v1.55.1 has successfully installed.

Now run "rclone config" for setup. Check https://rclone.org/docs/ for more details.

The mandb errors and warnings can be ignored; I rarely read rclone's documentation through man anyway.
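Piping a downloaded script straight into sudo bash runs it as root without anyone having read it. As a more cautious variant, the sketch below saves the installer to a file first. The function name fetch_rclone_installer and the default file name are my own choices for illustration; they are not part of rclone.

```shell
# Hypothetical helper: save the installer to a file so it can be reviewed
# before it is run with root privileges.
fetch_rclone_installer() {
    dest="${1:-./rclone-install.sh}"   # where to save the script (assumed default)
    # -f: fail on HTTP errors, -sS: quiet but still report errors, -L: follow redirects
    curl -fsSL https://rclone.org/install.sh -o "$dest" || return 1
    echo "Saved to $dest. Review it, then run: sudo bash $dest"
}
```

After running fetch_rclone_installer and reading the script, install with sudo bash ./rclone-install.sh, then confirm the result with rclone version.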

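3.3 Configuring Rclone

After installing Rclone, you need to configure your object storage accounts. Run rclone config to start rclone's guided configuration:

For the different storage types, see the official documentation: Documentation (rclone.org)

Once a remote has been added, rclone creates its configuration file under the current Linux user's home directory, with the directory tree shown below. The rclone.conf file stores everything you enter through rclone config. If you are familiar with its format, you can edit the file directly instead of running rclone config.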

~/.config/
├── procps
└── rclone
    └── rclone.conf

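I use Tencent Cloud COS and a local MinIO object store; the relevant reference documents are:

MinIO configuration (rclone.org)

Tencent COS configuration (rclone.org)

4. Configuring the MinIO remote

The steps for adding the MinIO object storage remote with rclone config are as follows:

1. Choose an action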

[user@localhost ~]$ rclone config

2021/07/12 21:18:21 NOTICE: Config file "/home/user/.config/rclone/rclone.conf" not found - using defaults

No remotes found - make a new one

n) New remote

s) Set configuration password

q) Quit config

n/s/q>

Enter n to choose n) New remote, that is, to create a new remote. You are then asked to name it.

No remotes found - make a new one

n) New remote

s) Set configuration password

q) Quit config

n/s/q> n

name> miniolocal

I named it miniolocal here.

2. Choose the storage type

This step selects the storage type for the remote. rclone supports a large number of storage types, including some consumer cloud drives.

name> miniolocal

Type of storage to configure.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / 1Fichier

\ "fichier"

2 / Alias for an existing remote

\ "alias"

3 / Amazon Drive

\ "amazon cloud drive"

4 / Amazon S3 Compliant Storage Providers including AWS, Alibaba, Ceph, Digital Ocean, Dreamhost, IBM COS, Minio, and Tencent COS

\ "s3"

5 / Backblaze B2

\ "b2"

6 / Box

\ "box"

7 / Cache a remote

\ "cache"

8 / Citrix Sharefile

\ "sharefile"

9 / Compress a remote

\ "compress"

10 / Dropbox

\ "dropbox"

11 / Encrypt/Decrypt a remote

\ "crypt"

12 / Enterprise File Fabric

\ "filefabric"

13 / FTP Connection

\ "ftp"

14 / Google Cloud Storage (this is not Google Drive)

\ "google cloud storage"

15 / Google Drive

\ "drive"

16 / Google Photos

\ "google photos"

17 / Hadoop distributed file system

\ "hdfs"

18 / Hubic

\ "hubic"

19 / In memory object storage system.

\ "memory"

20 / Jottacloud

\ "jottacloud"

21 / Koofr

\ "koofr"

22 / Local Disk

\ "local"

23 / Mail.ru Cloud

\ "mailru"

24 / Mega

\ "mega"

25 / Microsoft Azure Blob Storage

\ "azureblob"

26 / Microsoft OneDrive

\ "onedrive"

27 / OpenDrive

\ "opendrive"

28 / OpenStack Swift (Rackspace Cloud Files, Memset Memstore, OVH)

\ "swift"

29 / Pcloud

\ "pcloud"

30 / Put.io

\ "putio"

31 / QingCloud Object Storage

\ "qingstor"

32 / SSH/SFTP Connection

\ "sftp"

33 / Sugarsync

\ "sugarsync"

34 / Tardigrade Decentralized Cloud Storage

\ "tardigrade"

35 / Transparently chunk/split large files

\ "chunker"

36 / Union merges the contents of several upstream fs

\ "union"

37 / Webdav

\ "webdav"

38 / Yandex Disk

\ "yandex"

39 / Zoho

\ "zoho"

40 / http Connection

\ "http"

41 / premiumize.me

\ "premiumizeme"

42 / seafile

\ "seafile"

Storage>

MinIO is S3-compatible object storage, so I choose 4 here and move on to choosing the provider.

Storage> 4

** See help for s3 backend at: https://rclone.org/s3/ **

Choose your S3 provider.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Amazon Web Services (AWS) S3

\ "AWS"

2 / Alibaba Cloud Object Storage System (OSS) formerly Aliyun

\ "Alibaba"

3 / Ceph Object Storage

\ "Ceph"

4 / Digital Ocean Spaces

\ "DigitalOcean"

5 / Dreamhost DreamObjects

\ "Dreamhost"

6 / IBM COS S3

\ "IBMCOS"

7 / Minio Object Storage

\ "Minio"

8 / Netease Object Storage (NOS)

\ "Netease"

9 / Scaleway Object Storage

\ "Scaleway"

10 / StackPath Object Storage

\ "StackPath"

11 / Tencent Cloud Object Storage (COS)

\ "TencentCOS"

12 / Wasabi Object Storage

\ "Wasabi"

13 / Any other S3 compatible provider

\ "Other"

provider>

MinIO Object Storage is item 7, so enter 7.

3. Getting S3 credentials from the runtime environment

provider> 7

Get AWS credentials from runtime (environment variables or EC2/ECS meta data if no env vars).

Only applies if access_key_id and secret_access_key is blank.

Enter a boolean value (true or false). Press Enter for the default ("false").

Choose a number from below, or type in your own value

1 / Enter AWS credentials in the next step

\ "false"

2 / Get AWS credentials from the environment (env vars or IAM)

\ "true"

env_auth>

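We authenticate to MinIO with an ID and password, so this option can be left alone. Just press Enter, and rclone immediately asks for access_key_id and secret_access_key.

4. Enter the account and secret key

First enter the account name, which is the access_key_id: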

env_auth>

AWS Access Key ID.

Leave blank for anonymous access or runtime credentials.

Enter a string value. Press Enter for the default ("").

access_key_id>

My account name here is myname. Then enter the secret key:

access_key_id> myname

AWS Secret Access Key (password)

Leave blank for anonymous access or runtime credentials.

Enter a string value. Press Enter for the default ("").

secret_access_key> mypassword

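The secret key here is mypassword.

5. Choose the region

The S3 protocol lets you specify a storage region. Tencent Cloud, for example, has regions such as Guangzhou, Nanjing, Shanghai, Chongqing, and Sichuan. Our MinIO instance has only the one local region, so there is nothing to choose; press Enter to skip.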

secret_access_key> mypassword

Region to connect to.

Leave blank if you are using an S3 clone and you don't have a region.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Use this if unsure. Will use v4 signatures and an empty region.

\ ""

2 / Use this only if v4 signatures don't work, e.g. pre Jewel/v10 CEPH.

\ "other-v2-signature"

region>

6. Enter the endpoint URL

My MinIO endpoint is local; the URL is http://localhost:9000.

region>

Endpoint for S3 API.

Required when using an S3 clone.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

endpoint>
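Before typing the endpoint in, it can help to confirm that MinIO is actually answering on that URL. The sketch below probes MinIO's liveness endpoint with curl. The helper name minio_is_live is hypothetical, and the default URL is simply the one used in this walkthrough.

```shell
# Hypothetical helper: succeed only if the MinIO server answers its liveness probe.
minio_is_live() {
    endpoint="${1:-http://localhost:9000}"
    # -f makes curl return non-zero on HTTP errors; -o /dev/null discards the body.
    curl -fsS -o /dev/null "$endpoint/minio/health/live"
}
```

For example, minio_is_live && echo "MinIO is up" prints the message only when the server responds successfully.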

7. Location constraint

This option corresponds to the region in step 5. We set no region there, so there is nothing to set here either; press Enter to skip.

endpoint> http://localhost:9000

Location constraint - must be set to match the Region.

Leave blank if not sure. Used when creating buckets only.

Enter a string value. Press Enter for the default ("").

location_constraint>

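8. ACL (access control) setting

This is the canned ACL that the S3 service applies to buckets and objects created through rclone; it is not related to Linux file permissions. I keep the default here, 1 "private", which gives the owner full control and no one else any access. The other options grant access to other S3 users, for example public read.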

location_constraint>

Canned ACL used when creating buckets and storing or copying objects.

This ACL is used for creating objects and if bucket_acl isn't set, for creating buckets too.

For more info visit https://docs.aws.amazon.com/AmazonS3/latest/dev/acl-overview.html#canned-acl

Note that this ACL is applied when server-side copying objects as S3

doesn't copy the ACL from the source but rather writes a fresh one.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Owner gets FULL_CONTROL. No one else has access rights (default).

\ "private"

2 / Owner gets FULL_CONTROL. The AllUsers group gets READ access.

\ "public-read"

/ Owner gets FULL_CONTROL. The AllUsers group gets READ and WRITE access.

3 | Granting this on a bucket is generally not recommended.

\ "public-read-write"

4 / Owner gets FULL_CONTROL. The AuthenticatedUsers group gets READ access.

\ "authenticated-read"

/ Object owner gets FULL_CONTROL. Bucket owner gets READ access.

5 | If you specify this canned ACL when creating a bucket, Amazon S3 ignores it.

\ "bucket-owner-read"

/ Both the object owner and the bucket owner get FULL_CONTROL over the object.

6 | If you specify this canned ACL when creating a bucket, Amazon S3 ignores it.

\ "bucket-owner-full-control"

acl>

9. Server-side encryption

Most object storage services can encrypt user data on the server side, so rclone offers a matching option. Two methods are offered below, AES256 and aws:kms.

acl>

The server-side encryption algorithm used when storing this object in S3.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / None

\ ""

2 / AES256

\ "AES256"

3 / aws:kms

\ "aws:kms"

server_side_encryption>

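We do not use server-side encryption here, so press Enter to skip.

10. KMS key ID

If you chose 3 aws:kms in the previous step, this step is where you supply the ARN of the KMS key to use. I did not enable encryption, so I press Enter to skip.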

server_side_encryption>

If using KMS ID you must provide the ARN of Key.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / None

\ ""

2 / arn:aws:kms:*

\ "arn:aws:kms:us-east-1:*"

sse_kms_key_id>

11. Advanced configuration

The settings above are normally enough. I do not need any advanced options here, so I skip this step.

sse_kms_key_id>

Edit advanced config? (y/n)

y) Yes

n) No (default)

y/n>

12. Confirm the configuration

After the steps above, rclone shows a summary, and you can still edit or delete the remote. Mine is correct, so I press Enter to confirm.

y/n>

Remote config

--------------------

[miniolocal]

type = s3

provider = Minio

access_key_id = myname

secret_access_key = mypassword

endpoint = http://localhost:9000

--------------------

y) Yes this is OK (default)

e) Edit this remote

d) Delete this remote

y/e/d>

13. Choose an action

After the previous step you are returned to the initial menu. The configuration is finished, so enter q to quit.

y/e/d>

Current remotes:

Name                 Type
====                 ====
miniolocal           s3

e) Edit existing remote

n) New remote

d) Delete remote

r) Rename remote

c) Copy remote

s) Set configuration password

q) Quit config

e/n/d/r/c/s/q> q

[user@localhost ~]$

The complete configuration session

[user@localhost ~]$ rclone config

2021/07/12 21:18:21 NOTICE: Config file "/home/user/.config/rclone/rclone.conf" not found - using defaults

No remotes found - make a new one

n) New remote

s) Set configuration password

q) Quit config

n/s/q> n

name> miniolocal

Type of storage to configure.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / 1Fichier

\ "fichier"

2 / Alias for an existing remote

\ "alias"

3 / Amazon Drive

\ "amazon cloud drive"

4 / Amazon S3 Compliant Storage Providers including AWS, Alibaba, Ceph, Digital Ocean, Dreamhost, IBM COS, Minio, and Tencent COS

\ "s3"

5 / Backblaze B2

\ "b2"

6 / Box

\ "box"

7 / Cache a remote

\ "cache"

8 / Citrix Sharefile

\ "sharefile"

9 / Compress a remote

\ "compress"

10 / Dropbox

\ "dropbox"

11 / Encrypt/Decrypt a remote

\ "crypt"

12 / Enterprise File Fabric

\ "filefabric"

13 / FTP Connection

\ "ftp"

14 / Google Cloud Storage (this is not Google Drive)

\ "google cloud storage"

15 / Google Drive

\ "drive"

16 / Google Photos

\ "google photos"

17 / Hadoop distributed file system

\ "hdfs"

18 / Hubic

\ "hubic"

19 / In memory object storage system.

\ "memory"

20 / Jottacloud

\ "jottacloud"

21 / Koofr

\ "koofr"

22 / Local Disk

\ "local"

23 / Mail.ru Cloud

\ "mailru"

24 / Mega

\ "mega"

25 / Microsoft Azure Blob Storage

\ "azureblob"

26 / Microsoft OneDrive

\ "onedrive"

27 / OpenDrive

\ "opendrive"

28 / OpenStack Swift (Rackspace Cloud Files, Memset Memstore, OVH)

\ "swift"

29 / Pcloud

\ "pcloud"

30 / Put.io

\ "putio"

31 / QingCloud Object Storage

\ "qingstor"

32 / SSH/SFTP Connection

\ "sftp"

33 / Sugarsync

\ "sugarsync"

34 / Tardigrade Decentralized Cloud Storage

\ "tardigrade"

35 / Transparently chunk/split large files

\ "chunker"

36 / Union merges the contents of several upstream fs

\ "union"

37 / Webdav

\ "webdav"

38 / Yandex Disk

\ "yandex"

39 / Zoho

\ "zoho"

40 / http Connection

\ "http"

41 / premiumize.me

\ "premiumizeme"

42 / seafile

\ "seafile"

Storage> 4

** See help for s3 backend at: https://rclone.org/s3/ **

Choose your S3 provider.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Amazon Web Services (AWS) S3

\ "AWS"

2 / Alibaba Cloud Object Storage System (OSS) formerly Aliyun

\ "Alibaba"

3 / Ceph Object Storage

\ "Ceph"

4 / Digital Ocean Spaces

\ "DigitalOcean"

5 / Dreamhost DreamObjects

\ "Dreamhost"

6 / IBM COS S3

\ "IBMCOS"

7 / Minio Object Storage

\ "Minio"

8 / Netease Object Storage (NOS)

\ "Netease"

9 / Scaleway Object Storage

\ "Scaleway"

10 / StackPath Object Storage

\ "StackPath"

11 / Tencent Cloud Object Storage (COS)

\ "TencentCOS"

12 / Wasabi Object Storage

\ "Wasabi"

13 / Any other S3 compatible provider

\ "Other"

provider> 7

Get AWS credentials from runtime (environment variables or EC2/ECS meta data if no env vars).

Only applies if access_key_id and secret_access_key is blank.

Enter a boolean value (true or false). Press Enter for the default ("false").

Choose a number from below, or type in your own value

1 / Enter AWS credentials in the next step

\ "false"

2 / Get AWS credentials from the environment (env vars or IAM)

\ "true"

env_auth>

AWS Access Key ID.

Leave blank for anonymous access or runtime credentials.

Enter a string value. Press Enter for the default ("").

access_key_id> myname

AWS Secret Access Key (password)

Leave blank for anonymous access or runtime credentials.

Enter a string value. Press Enter for the default ("").

secret_access_key> mypassword

Region to connect to.

Leave blank if you are using an S3 clone and you don't have a region.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Use this if unsure. Will use v4 signatures and an empty region.

\ ""

2 / Use this only if v4 signatures don't work, e.g. pre Jewel/v10 CEPH.

\ "other-v2-signature"

region>

Endpoint for S3 API.

Required when using an S3 clone.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

endpoint> http://localhost:9000

Location constraint - must be set to match the Region.

Leave blank if not sure. Used when creating buckets only.

Enter a string value. Press Enter for the default ("").

location_constraint>

Canned ACL used when creating buckets and storing or copying objects.

This ACL is used for creating objects and if bucket_acl isn't set, for creating buckets too.

For more info visit https://docs.aws.amazon.com/AmazonS3/latest/dev/acl-overview.html#canned-acl

Note that this ACL is applied when server-side copying objects as S3

doesn't copy the ACL from the source but rather writes a fresh one.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Owner gets FULL_CONTROL. No one else has access rights (default).

\ "private"

2 / Owner gets FULL_CONTROL. The AllUsers group gets READ access.

\ "public-read"

/ Owner gets FULL_CONTROL. The AllUsers group gets READ and WRITE access.

3 | Granting this on a bucket is generally not recommended.

\ "public-read-write"

4 / Owner gets FULL_CONTROL. The AuthenticatedUsers group gets READ access.

\ "authenticated-read"

/ Object owner gets FULL_CONTROL. Bucket owner gets READ access.

5 | If you specify this canned ACL when creating a bucket, Amazon S3 ignores it.

\ "bucket-owner-read"

/ Both the object owner and the bucket owner get FULL_CONTROL over the object.

6 | If you specify this canned ACL when creating a bucket, Amazon S3 ignores it.

\ "bucket-owner-full-control"

acl>

The server-side encryption algorithm used when storing this object in S3.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / None

\ ""

2 / AES256

\ "AES256"

3 / aws:kms

\ "aws:kms"

server_side_encryption>

If using KMS ID you must provide the ARN of Key.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / None

\ ""

2 / arn:aws:kms:*

\ "arn:aws:kms:us-east-1:*"

sse_kms_key_id>

Edit advanced config? (y/n)

y) Yes

n) No (default)

y/n>

Remote config

--------------------

[miniolocal]

type = s3

provider = Minio

access_key_id = myname

secret_access_key = mypassword

endpoint = http://localhost:9000

--------------------

y) Yes this is OK (default)

e) Edit this remote

d) Delete this remote

y/e/d>

Current remotes:

Name                 Type
====                 ====
miniolocal           s3

e) Edit existing remote

n) New remote

d) Delete remote

r) Rename remote

c) Copy remote

s) Set configuration password

q) Quit config

e/n/d/r/c/s/q> q

[user@localhost ~]$

This adds the following remote definition to the ~/.config/rclone/rclone.conf configuration file:

[user@localhost rclone]$ cat ~/.config/rclone/rclone.conf

[miniolocal]

type = s3

provider = Minio

access_key_id = myname

secret_access_key = mypassword

endpoint = http://localhost:9000
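Since rclone.conf can be edited directly instead of going through rclone config, the same section can also be written by a small script. The sketch below appends an S3-type section with the keys shown above. add_s3_remote is a hypothetical helper name of my own, and the values in the usage line are the placeholders from this walkthrough.

```shell
# Hypothetical helper: append an S3-type remote to an rclone config file.
# Arguments: config path, remote name, provider, access key, secret key, endpoint.
add_s3_remote() {
    conf="$1"; name="$2"; provider="$3"; key="$4"; secret="$5"; endpoint="$6"
    mkdir -p "$(dirname "$conf")"
    # Refuse to add a second section with the same name.
    if [ -f "$conf" ] && grep -qxF "[$name]" "$conf"; then
        echo "remote '$name' already exists in $conf" >&2
        return 1
    fi
    cat >> "$conf" <<EOF
[$name]
type = s3
provider = $provider
access_key_id = $key
secret_access_key = $secret
endpoint = $endpoint
EOF
    chmod 600 "$conf"   # the file holds the credentials in plain text
}
```

For the remote in this post, the call would be add_s3_remote ~/.config/rclone/rclone.conf miniolocal Minio myname mypassword http://localhost:9000.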

|

5. 配置腾讯云COS节点

5.1 创建腾讯云COS子账号

建议使用子账号保护云资源。这里只需要一个对源存储桶拥有 只读 权限的子账号即可。

5.2 授予子账号存储桶读取权限

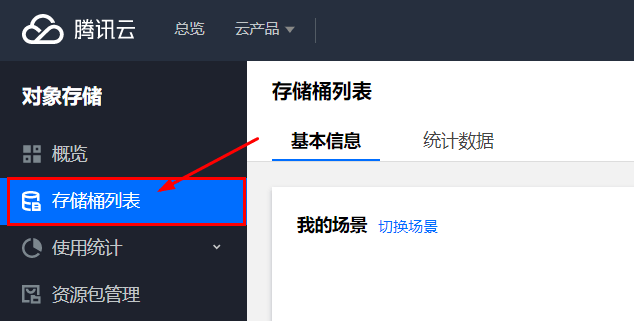

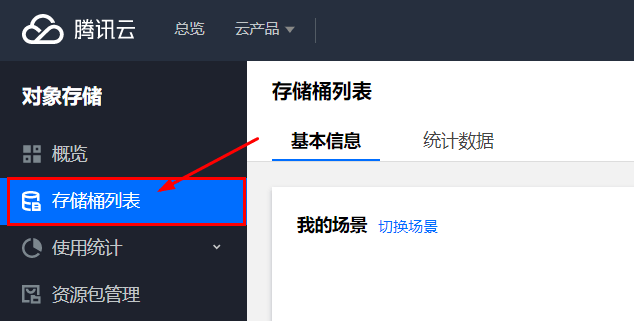

在腾讯云中进入 控制台 > 对象存储 > 存储桶列表 > 你要同步的存储桶

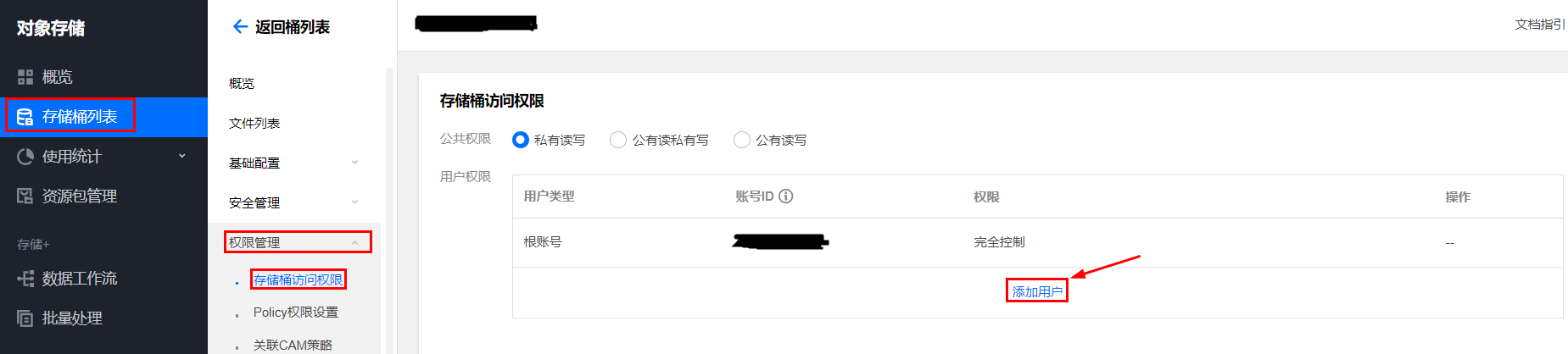

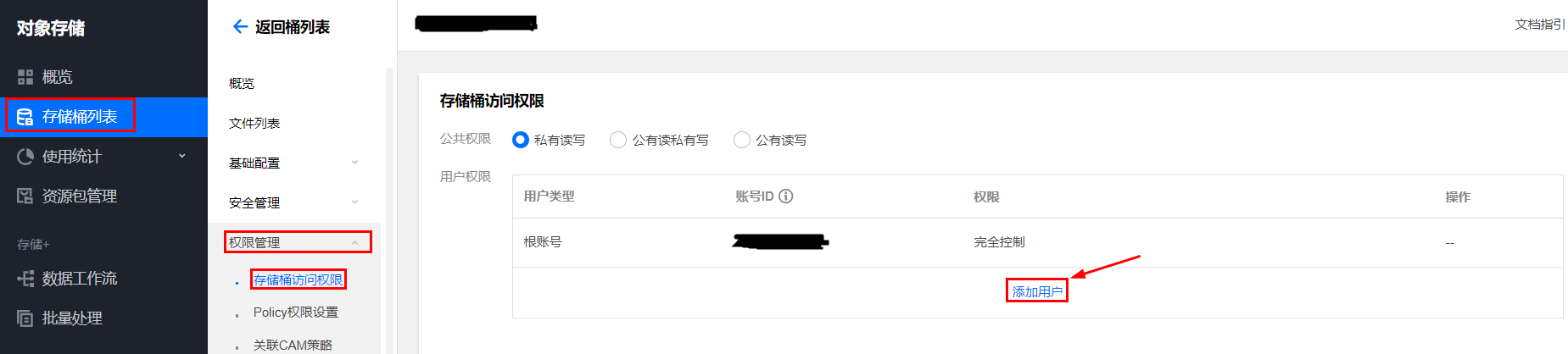

然后,在 权限管理 > 存储桶访问权限 里点击 添加用户 。

选择用户类型为 子账号 ,将刚刚创建的 子账号 添加进去,授予其如下图所示 数据读取 和 权限读取 权限。然后点击 保存 完成授权

接下来,你就可以使用这个子账号操作读取这个存储桶了。

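5.3 Configure the remote in Rclone

Configuring Tencent Cloud COS is similar to configuring MinIO.

However, after choosing the storage type in step 2 (the provider is item 11, Tencent Cloud Object Storage (COS), in the list shown earlier), you also have to choose a region. Pick the number of the region where the bucket you want to sync is located.

For the account and secret key in step 4, use the SecretId and SecretKey of the sub-account created above.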
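Once the remote exists, a read-only listing is a quick way to confirm that the sub-account's keys and the chosen region work before any sync is attempted. The sketch below wraps rclone lsf for that purpose. check_remote is a hypothetical helper name, and gzcos and blog-88888888 are the remote and bucket names that appear in the sync commands in this post.

```shell
# Hypothetical helper: list the top level of a bucket to confirm that a remote's
# credentials and endpoint work. Read-only; nothing is transferred or changed.
check_remote() {
    remote="$1"; bucket="$2"
    if rclone lsf "$remote:$bucket" >/dev/null; then
        echo "OK: $remote:$bucket is readable"
    else
        echo "FAILED: cannot list $remote:$bucket" >&2
        return 1
    fi
}
```

For example: check_remote gzcos blog-88888888.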

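6. Testing the sync command

We use the sync subcommand to sync the bucket on Tencent Cloud COS into the MinIO bucket. You can try it first with the -i (interactive) flag, which asks for confirmation before each change is made. The command has this form: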

rclone sync -i remote:SOURCE DESTINATION

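Here remote is the name given to a remote in rclone config in the previous steps. A path on the local filesystem needs no remote prefix, as with DESTINATION above. Since I am syncing from a cloud bucket to a bucket in the local MinIO, both sides need a remote name. The command looks roughly like this:

rclone sync -i SOURCE_REMOTE:SOURCE_BUCKET DEST_REMOTE:DEST_BUCKET

Once testing shows the command is correct, drop the -i flag and run the real, complete sync. sync makes the destination match the source, so it also deletes objects from the destination that no longer exist in the source. It transfers only objects/files that have changed; unchanged objects/files generate no traffic and no outbound download charges.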

rclone sync gzcos:blog-88888888 miniolocal:blog

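Normally the sync command also brings over metadata such as each object's creation time (MinIO supports this). Some object storage providers, however, Tencent COS among them, control timestamps such as the creation time more strictly, and even rclone sync cannot carry the creation time across. The objects in the destination bucket then show the time they were copied rather than the time recorded in the source bucket, but this does not affect use.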
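For a first run, a whole-job preview can be easier to read than confirming each object one by one. The sketch below uses rclone's --dry-run flag to show what would change and only runs the real sync after a single confirmation. sync_with_preview is a hypothetical wrapper of my own, not an rclone feature.

```shell
# Hypothetical wrapper: preview first, then sync only after one confirmation.
sync_with_preview() {
    src="$1"; dst="$2"
    # --dry-run reports what would be copied or deleted without doing it.
    rclone sync --dry-run "$src" "$dst" || return 1
    printf 'Apply these changes to %s? [y/N] ' "$dst"
    read -r answer
    case "$answer" in
        y|Y) rclone sync "$src" "$dst" ;;
        *)   echo "Aborted; nothing was changed." ;;
    esac
}
```

For example: sync_with_preview gzcos:blog-88888888 miniolocal:blog.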

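7. Adding the scheduled job

Note: do not forget to enable versioning in MinIO. Enabling versioning requires an erasure-coded deployment with at least four drives; a single-drive deployment does not support it.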
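If the MinIO deployment cannot enable versioning, one possible substitute is rclone's --backup-dir flag, which moves anything sync would overwrite or delete into another location on the same remote instead of discarding it. The sketch below is only an illustration under that assumption: sync_keep_old is a hypothetical wrapper, and the archive bucket named in the usage line is an invented example that would have to exist on the MinIO side.

```shell
# Hypothetical wrapper: sync, but move anything that would be overwritten or
# deleted in the destination into a dated archive path instead of losing it.
sync_keep_old() {
    src="$1"; dst="$2"; archive="$3"   # archive must be on the same remote as dst
    stamp=$(date +%Y-%m-%d)
    rclone sync "$src" "$dst" --backup-dir "$archive/$stamp"
}
```

For example: sync_keep_old gzcos:blog-88888888 miniolocal:blog miniolocal:blog-archive. The archive location must not overlap the destination, which is why a separate bucket is used here.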

Once the command has been tested, use the crontab command to add the scheduled job.

I added the following crontab entry, which runs a sync every day at 7:00:

0 7 * * * rclone sync gzcos:blog-88888888 miniolocal:blog

That completes the setup of scheduled sync backups with rclone.
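As an optional refinement, the cron entry can call a small wrapper instead of rclone directly, so that a slow run never overlaps the next one and each run leaves a log. The sketch below is one way to do that. run_backup, the lock directory, and the log path are all hypothetical names; --log-file and --log-level are standard rclone flags.

```shell
# Hypothetical cron wrapper: skip the run if the previous one is still going,
# and write rclone's output to a log file.
run_backup() {
    src="$1"; dst="$2"; log="$3"; lockdir="$4"
    # mkdir is atomic, so it doubles as a simple lock. A crash can leave the
    # directory behind; remove it by hand in that case.
    if ! mkdir "$lockdir" 2>/dev/null; then
        echo "backup already running (lock: $lockdir), skipping" >&2
        return 1
    fi
    rclone sync "$src" "$dst" --log-file "$log" --log-level INFO
    status=$?
    rmdir "$lockdir"
    return "$status"
}
```

Saved in a script that ends with a call such as run_backup gzcos:blog-88888888 miniolocal:blog "$HOME/blog-backup.log" /tmp/blog-backup.lock, the crontab entry would then point at that script instead of at rclone.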